Difference between revisions of "Data decompression chain"

(→3D modeling: resolved mix-up of volume based modeling with CSG scene graph) |

m |

||

| (35 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | |||

| − | |||

{{site specific term}} | {{site specific term}} | ||

| − | The data decompression chain is the sequence of expansion steps from | + | The '''"data decompression chain"''' is the sequence of expansion steps from |

* very compact highest level abstract blueprints of technical systems to | * very compact highest level abstract blueprints of technical systems to | ||

* discrete and simple lowest level instances that are much larger in size. | * discrete and simple lowest level instances that are much larger in size. | ||

| − | + | = 3D modeling = | |

| − | + | ||

| − | + | ||

| + | Main page: [[3D modeling]] | ||

| + | |||

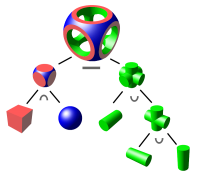

| + | [[File:Csg tree.png|200px|thumb|right|[[Constructive solid geometry]] graph (CSG graph). Today (2017) often still at the top of the chain.]] | ||

| + | |||

| + | [[Programmatic high level 3D modelling]] representations with code can <br> | ||

| + | considered to be a highly compressed data representation of the target product. <br> | ||

| + | |||

| + | The principle rule of programming which is: '''"don't repeat yourself"''' does apply. | ||

| + | * Multiply occurring objects (including e.g. rigid body [[crystolecule]] parts) are specified only once plus the locations and orientations (poses) of their occurrences. | ||

| + | * Curves are specified in a not yet discretized (e.g. not yet triangulated) way. See: [[Non-destructive modelling]] | ||

| + | * Complex (and perhaps even dynamic) assemblies are also encoded such that they complexly unfold on code execution. <br> Laying out [[gemstone based metamaterial]]s in complex dynamically interdigitating/interlinking/interweaving ways. | ||

| + | |||

| + | Note: '''"Programmatic"''' does not necessarily mean purely textual and in: "good old classical text editors". <br> | ||

| + | [[Structural editor]]s might and (as to the believe of the author) eventually will take over <br> | ||

| + | allowing for an optimal mixing of textual and graphical programmatic representation of target products in the "[[integrated deveuser interface]]s". | ||

| + | |||

| + | = The decompression chain in [[gem-gum factories]] (and 3D printers) = | ||

| + | |||

| + | The list goes: | ||

| + | * from top high level small data footprint | ||

| + | * to bottom low level large data footprint | ||

| + | ----- | ||

* high language 1: functional, logical, connection to computer algebra system | * high language 1: functional, logical, connection to computer algebra system | ||

* high language 2: imperative, functional | * high language 2: imperative, functional | ||

| Line 19: | Line 37: | ||

* Primitive signals: step-signals, rail-switch-states, clutch-states, ... | * Primitive signals: step-signals, rail-switch-states, clutch-states, ... | ||

| − | + | {{todo|add details to decompression chain points}} | |

| − | + | == High level language == | |

| − | + | ||

| − | === 3D modeling & functional programming | + | Batch processing style programmatic 3D modelling lends itself exceptionally nicely to <br> |

| + | side effect free programmatic representation. <br> | ||

| + | Side effect free means: Functions always give the same output when given the same explicit input. <br> | ||

| + | There is no funny hidden implicit global variable passing business going on in the background. | ||

| + | |||

| + | Sticking to code that is by some means enforced to be free of side effects comes with great benefits like: | ||

| + | * mathematical substitutability | ||

| + | * irrelevance of order of code lines | ||

| + | * narrowing down errors predictably leading to the problem spot <br>no horrid intractable errors that a excellent in refusing to be narrowed down | ||

| + | * ... | ||

| + | |||

| + | One side effect free system of today (2021) is [[OpenSCAD]] but <br> | ||

| + | it lacks in many other regards (outlined on the linked page) <br> | ||

| + | There are quite a few clones of OpenSCAD attempting to fix these other regards but <br> | ||

| + | more often than not at the extremely high cost of abandoning the guaranteed lack of side effects. <br> | ||

| + | In case of the case of embedded doman specific languages (EDSLs) this weakness is usually inherited from the the host language. <br> | ||

| + | (Javascript, Python, your imperative side effect laden language of choice ...) | ||

| + | |||

| + | Another side effect free 3D modelling system of today (2021) is [[implicitCAD]] but <br> | ||

| + | it lacks in many other regards (outlined on the linked page). <br> | ||

| + | Perhaps even more so than [[OpenSCAD]] but in other areas. | ||

| + | |||

| + | All of today's (2021) [[graphical point and click 3D modelling systems]] seem hopelessly insufficient compared to <br> | ||

| + | what would be desirable for modelling something as complex as a [[gemstone based on chip factory]].<br> | ||

| + | This is mostly because the [[visual-textual rift problem]] is still far (decades?) from being resolved yet. | ||

| + | |||

| + | == Quadric nets == | ||

| + | |||

| + | This is highly unusual but seems interesting (state 2021). <br> | ||

| + | One could say that in some way quadric nets are an intermediary representation of 3D geometry lying between | ||

| + | * general arbitrary function representation and | ||

| + | * triangulated representation. | ||

| + | |||

| + | Piecewise defined quadric mesh surfaces can be made to be one time continuously differentiable (C<sup>1</sup>). <br> | ||

| + | See: [https://en.wikipedia.org/wiki/Differentiable_function#Differentiability_classes Wikipedia Differentiability_classes])<br> | ||

| + | {{todo|Find if pretty much all surfaces of practical interest van be "[[quadriculation|quadriculated]]" just as they can be "triangulated"}} | ||

| + | |||

| + | The second derivative of a function representation (in form of a scalar field) gives the Hesse matrix where | ||

| + | * it's eigenvectors give the principle curvatures and | ||

| + | * it's determinant giving the Gaussian curvature (smaller zero: saddle, equal zero: or valley/plane, bigger zero: or hill/through) | ||

| + | |||

| + | Side-note: Projections from 3D down to 2D form conic sections of same or lower degree. <br> | ||

| + | These can be re-extrusion to 3D. It may be interesting to implement this functionality in programmatic 3D modelling tools.<br> | ||

| + | |||

| + | It seems that calculating convex hulls is only possible for a few special cases. <br> | ||

| + | This is different for triangle meshes where convex hulls are always possible. <br> | ||

| + | Convex hulls can be quite useful in 3D modelling, but it seems they are only applicable quite far down the [[decompression chain]]. | ||

| + | |||

| + | == Triangle nets == | ||

| + | |||

| + | * There is an enormous mountain of theoretical work about them. | ||

| + | * Often it might be desirable to skip this step and go directly <br>–– from some [[functional representation]] <br>–– to toolpaths. <br> <small>Today's (2021) FDM/FFF 3D-printers pretty much all go through the intermediary representation of triangle-meshes.</small> | ||

| + | * There are difficult since they offer a gazillion ways of bad geometries <br><small>(degenerate triangles, more than two edges meeting, vertices on edges, mesh holes, flipped normals, ...)</small> | ||

| + | |||

| + | == Tool-paths == | ||

| + | |||

| + | Related: | ||

| + | * [[Tracing trajectories of component in machine phase]] | ||

| + | * [[Sequence of zones]] | ||

| + | * [[Mechanosynthesis core]] | ||

| + | |||

| + | == Primitive signals == | ||

| + | |||

| + | The many places Where the control-subsystem finally comes together with the power-subsystem. <br> | ||

| + | Signals are amplified to do the driving of motions but also possibly energy recuperation from the motions of the nano-robotics. <br> | ||

| + | Related: | ||

| + | * [[Coumputation subsystem]] | ||

| + | * [[Energy subsystem]] – [[Drive subsystem of a gem-gum factory]] | ||

| + | |||

| + | = (Compilation) Targets = | ||

| + | |||

| + | Beside the actual physical product another desired product of the code is just a digital preview. <br> | ||

| + | So there are several desired outputs for one and the same code. <br> | ||

| + | <small>Maybe useful for compiling the same code to different targets (as present in this context): [[Compiling to categories (Conal Elliott)]]</small> | ||

| + | |||

| + | '''Possible desired outputs include but are not limited to:''' | ||

| + | * '''the actual physical target product object''' | ||

| + | * virtual simulation of the potential product (2D or some 3D format) | ||

| + | * approximation of output in form of [[utility fog]]? | ||

| + | |||

| + | == 3D modeling & functional programming == | ||

Modeling of static 3D models is purely declarative. | Modeling of static 3D models is purely declarative. | ||

| − | * example: OpenSCAD | + | * example: [[OpenSCAD]] |

... | ... | ||

| − | + | = Similar situations in today's computer architectures = | |

* high level language -> | * high level language -> | ||

| Line 37: | Line 134: | ||

* actual actions of the target data processing machine | * actual actions of the target data processing machine | ||

| − | + | = Bootstrapping of the decompression chain = | |

| − | One of the [[common misconceptions| | + | One of the [[common misconceptions|concerns]] regarding the feasibility of [[advanced productive nanosystem]]s |

| − | is that all the necessary data cannot be fed to the [[mechanosynthesis core]]s and [[crystolecule assembly robotics]] | + | is the worry that that all the necessary data cannot be fed to |

| + | * the [[mechanosynthesis core]]s and | ||

| + | * the [[crystolecule assembly robotics]] | ||

| + | <small>The former are mostly hard coded and don't need much data by the way.</small> | ||

| − | For example this size comparison in [https://youtu.be/Q9RiB_o7Szs?t=13m35s E. Drexlers TEDx talk (2015) 13:35] | + | For example this size comparison in [https://youtu.be/Q9RiB_o7Szs?t=13m35s E. Drexlers TEDx talk (2015) 13:35] can (if taken to literally) <br> |

| − | can (if taken to literally) | + | lead to the misjudgment that there is an fundamentally insurmountable data bottleneck. <br> |

| + | Of course trying to feed yotabits per second over those few pins would be ridiculous and impossible, but that is not what is planned. <br> | ||

| + | {{wikitodo| move this topic to [[Data IO bottleneck]]}} | ||

| − | We already know how to avoid such a bottleneck. | + | We already know how to avoid such a bottleneck. <br> |

| − | Albeit we program computers with our fingers delivering just a few bits per second computers now perform petabit per second internally. | + | Albeit we program computers with our fingers delivering just a few bits per second <br> |

| + | computers now perform petabit per second internally. | ||

| − | The goal is reachable by gradually building up a hierarchy of decompression steps. | + | The goal is reachable by gradually building up a hierarchy of decompression steps. <br> |

| − | The most low level most high volume data is generated internally and locally very near where it's finally "consumed". | + | The most low level most high volume data is generated internally and locally very near to where it's finally "consumed". |

| − | = | + | = Decompression chain in nature = |

| − | * [[ | + | There is also necessarily some sort of decompression chain <br> |

| − | * | + | in biological living systems like large animals and plants <br> |

| − | * The reverse: | + | It is very different (and incompatible) to the compression chain that we can expect to emerge in [[advanced productive nanosystem]]s though. |

| − | * In the case of [[synthesis of food]] the vastly different '''decompression chain''' between biological systems and advanced diamondoid nanofactories leads to the situation that nanofactories cannot synthesize exact copies of food down to the placement of every atom. See [[Food structure irrelevancy gap]] for a viable alternative. | + | |

| + | Larger scale assembly in living biological systems is very different to [[convergent assembly]] in [[nanofactories]]. <br> | ||

| + | In plans there are things like the [https://en.wikipedia.org/wiki/Meristem meristem tissue]. <br> | ||

| + | And large scale parts cannot be shuffled around after the fact of large scale assembly. <br> | ||

| + | |||

| + | Small scale assembly in living biological systems is very different to assembly in [[nanofactories]]. | ||

| + | * Proteins after their proteosynthesis plus folding-process are <br>integrated into more or less wobbly cell membranes or directly unconstrainedly into cytoplasma. | ||

| + | * [[Crystolecule]]s are after their [[mechanosynthesis]] are <br>placed into a specific spot that is specifiable in a global coordinate system. | ||

| + | |||

| + | == Why are natural and artificial decompression chains fundamentally incompatible? == | ||

| + | |||

| + | In case of the [[synthesis of food]] take the (exceptionally challenging) example of an apple. <br> | ||

| + | It would obviously not be practically possible (and not sane) to store the location of ever atom. | ||

| + | * (A) that is waaaaaay too much data – as in the natural case we need a decompression chain | ||

| + | * (B) an apple is mostly liquid water with atoms zapping around like crazy at room temperature <br>– well one could shock-cryo-freeze an apple maybe | ||

| + | |||

| + | '''[[Synthesis of food]]:'''<br> | ||

| + | An obvious approach would be to do data-compression on the location of molecules of the shock frozen apple <br> | ||

| + | data-compression like it's e.g. done in lossy image compression <br> | ||

| + | (or in the future with some more advanced AI based compression means ~ see deep-dream)<br> | ||

| + | Such artificial data-compression would lead to some very weird and unnatural artifacts. <br> | ||

| + | But that should be ok in the case of food. | ||

| + | * As long as nourishment value and smell (type of molecules on smallest scales) is sufficiently close and | ||

| + | * As long as the larger scale texture is sufficiently close. <br>No one wants a terrifying artificial Frankenstein apple in the uncanny valley of food texture, right? <br>See: [[Why identical copying is unnecessary for foodsynthesis]] | ||

| + | |||

| + | '''Synthesis of replacement organs:'''<br> | ||

| + | In the case of living replacement organs weird compression artifacts <br> | ||

| + | (like e.g. breaking cell walls or putting molecules at insufficiently accurate places) would be a showstopper problem.<br> | ||

| + | But that problem is dwarfed by the problem that the mechanosynthesis of glassy shock frozen water <br> | ||

| + | * sounds exceptionally difficult and may well be impossible - temporary holding of many weak many hydrogen bonds in odd barely stable angles | ||

| + | * would certainly be astronomically slow due to a ever shrinking surface area per volume to build on due to the lack of availability of convergent assembly | ||

| + | |||

| + | '''The proper way (proper decomprssion-chain) to synthesize biological products:'''<br> | ||

| + | The much much more practical and proper way to go is microscale paste 3D printing of cultivated cells.<br> | ||

| + | Having the DNA of an apple tree as the building plan for an apple does not help in the artificial synthesis of an apple<br> | ||

| + | with an [[gem-gum tech]] based cell cultivating food synthesizer that operates more like a microscale paste printer.<br> | ||

| + | Just a program/buildplan that is meant for such a food-synthesizer woud not work as a seed for an apple tree that can be buried ans sprouted. | ||

| + | |||

| + | One could however eventually make a system that behaves like a tree and sprouts gemstone based food synthesizers. | ||

| + | More as a gag than practical. | ||

| + | |||

| + | == Philosophical == | ||

| + | |||

| + | The end result of natures evolution process led to some incredibly high degrees of data-compression. <br> | ||

| + | Like the miraculous fact that the DNA build-plan for a whole human being fits into just a few GB of data (ignoring microbiome and epigenetics). | ||

| + | |||

| + | === high data compression may limit degree of seperation of concerns – mabe? === | ||

| + | |||

| + | {{speculativity warning}} | ||

| + | |||

| + | The extremely high levels of data-compression in living organisms may have come at the cost of <br> | ||

| + | not much attainment of [[separation of concerns]] over the course of evolution. <br> | ||

| + | The very non-local folding-behavior of proteins may be an example of such lack of [[separation of concerns]].<br> | ||

| + | Albeit in many cases we just might not understand it yet. | ||

| + | |||

| + | But perhaps just sufficient separation of concerns must have been attained such <br> | ||

| + | that evolution can sufficiently well adapt to changing environmental conditions?? | ||

| + | |||

| + | It's not that in nature there is no separation of concerns at all in the DNA of living organisms <br> | ||

| + | Large scale continuous sections even sometimes correspond to whole body parts (as experiments with fruit-flies showed) | ||

| + | |||

| + | === Artificial vs natural === | ||

| + | |||

| + | Regarding [[separation of concerns]]: | ||

| + | * The buildup of the natural data-compression-chain (evolution) is top down | ||

| + | * The buildup of artificial data-compression-chain (3D modelling) is bottom up | ||

| + | |||

| + | '''System design by natural evolution is pretty brutal:''' | ||

| + | * initially does not care that it cannot change one parameter without changing half a dozen others too in weird ways '''(top down)''' | ||

| + | * The testing is fully offloaded to the end-users and running into a bug can literally be fatal | ||

| + | * DNA "source-code" is very much not designed to be read and understood | ||

| + | * There is no documentation whatsoever | ||

| + | |||

| + | '''Systems designed by humans:''' | ||

| + | * starts with simple base cases where all parameters can be adjusted cleanly and separately '''(bottom up)''' | ||

| + | * then finds ways to make the code more general – less code covering more cases – can be partly related to loss of separation of concerns | ||

| + | * ideally the special cases are kept around for documentation on how and why the final "[[abstract nonsense]]" came to be | ||

| + | |||

| + | Given that human system design processes | ||

| + | * can build up slowly and steadily and given they | ||

| + | * are not limited by what individual humans can understand | ||

| + | the complexity of systems created by humans can steadily and stealthily creep up to levels that <br> | ||

| + | at some point become so advanced that they can well be considered life-like. | ||

| + | |||

| + | <small>Related: [[Common_misconceptions_about_atomically_precise_manufacturing#We_would_need_god_like_skills_to_create_life-like_nanotechnology]]</small> | ||

| + | |||

| + | = Related = | ||

| + | |||

| + | * '''[[Control hierarchy]]''' | ||

| + | * [[Data compression]] | ||

| + | * The reverse: Finding a good compression/encoding. <br>While decompressing is a technique, compressing is an art – (a vague analogue to how differentiation is a technique and integration an art)<br> See: [[the source of new axioms]] – {{speculativity warning}} | ||

| + | * '''[[Why identical copying is unnecessary for foodsynthesis]]''' and [[Synthesis of food]] <br>In the case of [[synthesis of food]] the vastly different '''decompression chain''' between biological systems and advanced diamondoid nanofactories leads to the situation that nanofactories cannot synthesize exact copies of food down to the placement of every atom. See [[Food structure irrelevancy gap]] for a viable alternative. | ||

* constructive corecursion | * constructive corecursion | ||

| + | * [[Data IO bottleneck]] | ||

| + | * [[Compiling to categories (Conal Elliott)]] | ||

| + | * mergement of GUI-IDE & code-IDE | ||

| + | ----- | ||

| + | * [[Constructive solid geometry]] | ||

| + | * [[Design of crystolecules]] | ||

| + | ----- | ||

| + | * [[The three axes of the Center for Bits and Atoms]] | ||

| + | |||

| + | = External Links = | ||

| + | |||

| + | == Wikipedia == | ||

| + | |||

| + | * [https://en.wikipedia.org/wiki/Solid_modeling Solid_modeling], [https://en.wikipedia.org/wiki/Computer_graphics_(computer_science) Computer_graphics_(computer_science)] | ||

| + | * [https://en.wikipedia.org/wiki/Constructive_solid_geometry Constructive_solid_geometry] | ||

| + | * Related to volume based modeling: [https://en.wikipedia.org/wiki/Quadric Quadrics] [https://en.wikipedia.org/wiki/List_of_mathematical_shapes#Quadrics (in context of mathematical shapes)] [https://en.wikipedia.org/wiki/Differential_geometry_of_surfaces#Quadric_surfaces (in context of surface differential geometry)] | ||

| + | * Related to volume based modeling: [https://en.wikipedia.org/wiki/Level-set_method Level-set_method] [https://en.wikipedia.org/wiki/Signed_distance_function Signed_distance_function] | ||

| + | * Avoidable in steps after volume based modeling: [https://en.wikipedia.org/wiki/Triangulation_(geometry) Triangulation_(geometry)], [https://en.wikipedia.org/wiki/Surface_triangulation Surface_triangulation] | ||

| + | ---- | ||

| + | * [https://en.wikipedia.org/wiki/Boundary_representation Boundary representation] | ||

| + | * [https://en.wikipedia.org/wiki/Function_representation Function representation] | ||

| + | |||

[[Category:Information]] | [[Category:Information]] | ||

| + | [[Category:Programming]] | ||

| + | [[Category:Software]] | ||

Latest revision as of 09:34, 5 May 2024

The "data decompression chain" is the sequence of expansion steps from

- very compact highest level abstract blueprints of technical systems to

- discrete and simple lowest level instances that are much larger in size.


3D modeling

Main page: 3D modeling

Programmatic high level 3D modelling representations with code can be

considered a highly compressed data representation of the target product.

The principal rule of programming, "don't repeat yourself", applies here.

- Multiply occurring objects (including e.g. rigid body crystolecule parts) are specified only once plus the locations and orientations (poses) of their occurrences.

- Curves are specified in a not yet discretized (e.g. not yet triangulated) way. See: Non-destructive modelling

- Complex (and perhaps even dynamic) assemblies are also encoded such that they unfold on code execution.

Example: laying out gemstone based metamaterials in complex, dynamically interdigitating/interlinking/interweaving ways.
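The "specify once, place many times" rule from the list above can be sketched in a few lines. This is only an illustration: `Pose`, `Part` and `expand` are hypothetical names, and the orientation is reduced to a single angle to keep the sketch short.

```python
from dataclasses import dataclass

# All names here are hypothetical and only serve the illustration.
@dataclass(frozen=True)
class Pose:
    x: float
    y: float
    z: float
    yaw_deg: float  # orientation reduced to one angle for brevity

@dataclass(frozen=True)
class Part:
    name: str
    vertices: tuple  # the geometry is stored exactly once

def expand(part, poses):
    """Decompression step: one part definition plus N poses -> N explicit instances."""
    return [(part.name, pose, part.vertices) for pose in poses]

bearing = Part("bearing", vertices=((0, 0, 0), (1, 0, 0), (0, 1, 0)))
# A 10 x 10 grid of occurrences is described by a short rule, not by 100 copies.
poses = [Pose(float(i), float(j), 0.0, 0.0) for i in range(10) for j in range(10)]
instances = expand(bearing, poses)
```

The compact form is the part plus the rule that generates the poses; the list `instances` is the larger, lower level form.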

Note: "Programmatic" does not necessarily mean purely textual and written in "good old classical text editors".

Structural editors might (and in the author's belief eventually will) take over,

allowing for an optimal mixing of textual and graphical programmatic representation of target products in the "integrated deveuser interfaces".

The decompression chain in gem-gum factories (and 3D printers)

The list goes:

- from top high level small data footprint

- to bottom low level large data footprint

- high language 1: functional, logical, connection to computer algebra system

- high language 2: imperative, functional

- Volume based modeling with "level set method" or even "signed distance fields"

(organized in CSG graphs which reduce to the three operations: sign-flip, sum and maximum)

- Surface based modeling with parametric surfaces (organized in CSG graphs)

- quadric nets C1 (rarely employed today 2017)

- triangle nets C0

- tool-paths

- Primitive signals: step-signals, rail-switch-states, clutch-states, ...
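How a CSG graph over a volume based model can reduce to sign-flip and maximum may be sketched as follows. The sign convention (negative inside the solid) and all function names are assumptions for illustration. Reading "sum" as adding a constant offset to the field is likewise only one possible interpretation.

```python
import math

# Assumed convention: a field is negative inside the solid, positive outside.
def sphere(cx, cy, cz, r):
    return lambda x, y, z: math.sqrt((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2) - r

def complement(f):            # sign-flip
    return lambda x, y, z: -f(x, y, z)

def intersection(f, g):       # maximum
    return lambda x, y, z: max(f(x, y, z), g(x, y, z))

def offset(f, d):             # sum with a constant: grows the solid by d
    return lambda x, y, z: f(x, y, z) + (-d)

def union(f, g):              # derived: not (not f and not g)
    return complement(intersection(complement(f), complement(g)))

def difference(f, g):         # derived: f and not g
    return intersection(f, complement(g))

a = sphere(0, 0, 0, 1.0)
b = sphere(1, 0, 0, 1.0)
lens = intersection(a, b)     # overlap of both spheres
moon = difference(a, b)       # first sphere with the second one cut away
```

Note that fields combined this way still have the right zero level set, but they are in general no longer exact distance fields.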

(TODO: add details to decompression chain points)

High level language

Batch processing style programmatic 3D modelling lends itself exceptionally nicely to

side effect free programmatic representation.

Side effect free means: Functions always give the same output when given the same explicit input.

There is no funny hidden implicit global variable passing business going on in the background.

Sticking to code that is by some means enforced to be free of side effects comes with great benefits like:

- mathematical substitutability

- irrelevance of order of code lines

- narrowing down errors predictably leads to the problem spot

(no horrid intractable errors that are excellent at refusing to be narrowed down)

- ...
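The difference between code with and without side effects can be shown with a small sketch. Python does not enforce purity, so this only illustrates the property itself; the function names are made up.

```python
# Impure: the result depends on hidden global state that changes between calls.
_counter = 0

def impure_radius(base):
    global _counter
    _counter += 1
    return base + _counter

# Pure: the result depends only on the explicit arguments.
def pure_radius(base, index):
    return base + index

first = impure_radius(1.0)
second = impure_radius(1.0)   # same explicit input, different output

# Irrelevance of order: evaluating the pure calls in reverse gives the same values.
radii_forward = [pure_radius(1.0, i) for i in range(3)]
radii_backward = [pure_radius(1.0, i) for i in reversed(range(3))][::-1]
```

With the pure function, any call can be replaced by its value (mathematical substitutability), which is what makes errors easier to narrow down.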

One side effect free system of today (2021) is OpenSCAD but

it lacks in many other regards (outlined on the linked page).

There are quite a few clones of OpenSCAD attempting to fix these other regards but

more often than not at the extremely high cost of abandoning the guaranteed lack of side effects.

In the case of embedded domain specific languages (EDSLs) this weakness is usually inherited from the host language.

(JavaScript, Python, your imperative side effect laden language of choice ...)

Another side effect free 3D modelling system of today (2021) is implicitCAD but

it lacks in many other regards (outlined on the linked page).

Perhaps even more so than OpenSCAD but in other areas.

All of today's (2021) graphical point and click 3D modelling systems seem hopelessly insufficient compared to

what would be desirable for modelling something as complex as a gemstone based on chip factory.

This is mostly because the visual-textual rift problem is still far (decades?) from being resolved.

Quadric nets

This is highly unusual but seems interesting (state 2021).

One could say that in some way quadric nets are an intermediary representation of 3D geometry lying between

- general arbitrary function representation and

- triangulated representation.

Piecewise defined quadric mesh surfaces can be made to be one time continuously differentiable (C1).

See: Wikipedia Differentiability_classes

(TODO: Find if pretty much all surfaces of practical interest can be "quadriculated" just as they can be "triangulated")

The second derivative of a function representation (in form of a scalar field) gives the Hessian matrix where (for a height field at a point with vanishing gradient)

- its eigenvalues give the principal curvatures (its eigenvectors give the principal directions) and

- its determinant gives the Gaussian curvature (less than zero: saddle; equal to zero: valley or plane; greater than zero: hill or trough)
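The classification by the sign of the Hessian determinant can be tried out on a single quadric patch. The following is a minimal sketch under a stated assumption: the surface is taken as a height field z = (a x² + 2 b x y + c y²) / 2 around the origin, where the gradient vanishes. The function name is hypothetical.

```python
import math

def classify_quadric_patch(a, b, c):
    """Classify the height field z = (a*x**2 + 2*b*x*y + c*y**2) / 2 at the origin.

    Its Hessian is [[a, b], [b, c]]. The gradient vanishes at the origin, so the
    eigenvalues are the principal curvatures and the determinant is the
    Gaussian curvature there.
    """
    mean = (a + c) / 2.0
    spread = math.sqrt(((a - c) / 2.0) ** 2 + b ** 2)
    k1, k2 = mean + spread, mean - spread   # eigenvalues of a symmetric 2x2 matrix
    gaussian = a * c - b * b                # determinant, equals k1 * k2
    # Exact comparison with zero is good enough for a sketch; real code needs a tolerance.
    if gaussian < 0:
        kind = "saddle"
    elif gaussian == 0:
        kind = "valley or plane"
    else:
        kind = "hill or trough"
    return k1, k2, gaussian, kind
```

For example `classify_quadric_patch(1, 0, -1)` describes the saddle z = (x² - y²) / 2, while `classify_quadric_patch(1, 0, 0)` describes a valley that is flat along y.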

Side-note: Projections from 3D down to 2D form conic sections of same or lower degree.

These can be re-extruded to 3D. It may be interesting to implement this functionality in programmatic 3D modelling tools.

It seems that calculating convex hulls is only possible for a few special cases.

This is different for triangle meshes where convex hulls are always possible.

Convex hulls can be quite useful in 3D modelling, but it seems they are only applicable quite far down the decompression chain.

Triangle nets

- There is an enormous mountain of theoretical work about them.

- Often it might be desirable to skip this step and go directly

–– from some functional representation

–– to toolpaths.

Today's (2021) FDM/FFF 3D-printers pretty much all go through the intermediary representation of triangle-meshes.

- They are difficult since they offer a gazillion ways of bad geometries

(degenerate triangles, more than two edges meeting, vertices on edges, mesh holes, flipped normals, ...)
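A few of the bad geometries listed above are easy to detect from the index list alone. The following sketch checks for zero-area triangles, open edges (holes) and edges shared by more than two triangles. It deliberately does not check flipped normals or vertices lying on edges, and the function name is made up for illustration.

```python
from collections import Counter

def mesh_problems(vertices, triangles):
    """Report some classic triangle-mesh defects.

    vertices: list of (x, y, z); triangles: list of (i, j, k) vertex indices.
    """
    problems = []
    edge_use = Counter()
    for t, (i, j, k) in enumerate(triangles):
        ax, ay, az = vertices[i]
        bx, by, bz = vertices[j]
        cx, cy, cz = vertices[k]
        ux, uy, uz = bx - ax, by - ay, bz - az
        vx, vy, vz = cx - ax, cy - ay, cz - az
        # The cross product of two edge vectors has twice the triangle area as length.
        nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
        if nx * nx + ny * ny + nz * nz == 0:
            problems.append(("degenerate triangle", t))
        for e in ((i, j), (j, k), (k, i)):
            edge_use[tuple(sorted(e))] += 1
    for edge, n in sorted(edge_use.items()):
        if n == 1:
            problems.append(("open edge (mesh hole)", edge))
        elif n > 2:
            problems.append(("edge shared by more than two triangles", edge))
    return problems

# A closed tetrahedron: every edge is used by exactly two triangles.
tetra_vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
tetra_triangles = [(0, 2, 1), (0, 1, 3), (1, 2, 3), (0, 3, 2)]
```

Calling `mesh_problems(tetra_vertices, tetra_triangles)` on the closed tetrahedron reports nothing; dropping one triangle exposes its three edges as a hole.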

Tool-paths

Related:

- Tracing trajectories of component in machine phase

- Sequence of zones

- Mechanosynthesis core

Primitive signals

The many places where the control-subsystem finally comes together with the power-subsystem.

Signals are amplified to drive motions, and possibly also to recuperate energy from the motions of the nano-robotics.

Related:

- Computation subsystem

- Energy subsystem – Drive subsystem of a gem-gum factory

(Compilation) Targets

Besides the actual physical product, another desired product of the code is just a digital preview.

So there are several desired outputs for one and the same code.

Maybe useful for compiling the same code to different targets (as present in this context): Compiling to categories (Conal Elliott)

Possible desired outputs include but are not limited to:

- the actual physical target product object

- virtual simulation of the potential product (2D or some 3D format)

- approximation of output in form of utility fog?
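The idea of several outputs from one and the same code can be sketched with a toy model that is interpreted by two different back ends. The model format (a union of axis-aligned boxes on a small 2D grid) and all names are hypothetical; this is not how "compiling to categories" works, just the simplest possible instance of one description with two targets.

```python
# The model is plain data: a hypothetical union of axis-aligned boxes.
MODEL = [("box", 0, 0, 4, 2), ("box", 1, 1, 2, 4)]   # (kind, x, y, width, height)

def covered(model, x, y):
    """True if grid cell (x, y) lies inside any box of the model."""
    return any(bx <= x < bx + w and by <= y < by + h for _, bx, by, w, h in model)

def to_preview(model, size=6):
    """Target 1: a 2D ASCII preview, one string per row."""
    return ["".join("#" if covered(model, x, y) else "." for x in range(size))
            for y in range(size)]

def to_toolpath(model, size=6):
    """Target 2: deposit positions (x, y), row by row in zigzag order."""
    path = []
    for y in range(size):
        xs = range(size) if y % 2 == 0 else range(size - 1, -1, -1)
        path.extend((x, y) for x in xs if covered(model, x, y))
    return path
```

Both functions read the same `MODEL`; only the interpretation differs.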

3D modeling & functional programming

Modeling of static 3D models is purely declarative.

- example: OpenSCAD

...

Similar situations in today's computer architectures

- high level language ->

- compiler infrastructure (e.g. LLVM) ->

- assembler language ->

- actual actions of the target data processing machine

Bootstrapping of the decompression chain

One of the concerns regarding the feasibility of advanced productive nanosystems is the worry that all the necessary data cannot be fed to

- the mechanosynthesis cores and

- the crystolecule assembly robotics

The former are mostly hard coded and don't need much data by the way.

For example this size comparison in E. Drexler's TEDx talk (2015) 13:35 can (if taken too literally)

lead to the misjudgment that there is a fundamentally insurmountable data bottleneck.

Of course trying to feed yottabits per second over those few pins would be ridiculous and impossible, but that is not what is planned.

(wiki-TODO: move this topic to Data IO bottleneck)

We already know how to avoid such a bottleneck.

Although we program computers with our fingers, delivering just a few bits per second,

computers now process petabits per second internally.

The goal is reachable by gradually building up a hierarchy of decompression steps.

The lowest level, highest volume data is generated internally and locally, very near to where it is finally "consumed".
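That high volume data can be generated right where it is consumed, without ever crossing a narrow input channel, can be illustrated with a lazy generator. The blueprint format and the signal tuples are made up for this sketch; the numbers are arbitrary.

```python
import json

def step_signals(blueprint):
    """Lazily expand a compact blueprint into primitive step signals."""
    for layer in range(blueprint["layers"]):
        for row in range(blueprint["rows"]):
            for col in range(blueprint["cols"]):
                yield (layer, row, col, "step")

blueprint = {"layers": 100, "rows": 100, "cols": 100}
input_bytes = len(json.dumps(blueprint))          # what has to be fed in from outside

# Signals are consumed one at a time; the expanded stream is never stored as a whole.
signal_count = sum(1 for _ in step_signals(blueprint))
expansion = signal_count / input_bytes
```

A few dozen bytes of input unfold into a million signals here, and adding further decompression levels multiplies that ratio.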

Decompression chain in nature

There is also necessarily some sort of decompression chain

in biological living systems like large animals and plants.

It is very different from (and incompatible with) the decompression chain that we can expect to emerge in advanced productive nanosystems though.

Larger scale assembly in living biological systems is very different to convergent assembly in nanofactories.

In plants there are things like the meristem tissue.

And large scale parts cannot be shuffled around after the fact of large scale assembly.

Small scale assembly in living biological systems is very different to assembly in nanofactories.

- Proteins are, after their proteosynthesis plus folding-process,

integrated into more or less wobbly cell membranes or directly and unconstrained into the cytoplasm.

- Crystolecules are, after their mechanosynthesis,

placed into a specific spot that is specifiable in a global coordinate system.

Why are natural and artificial decompression chains fundamentally incompatible?

In case of the synthesis of food take the (exceptionally challenging) example of an apple.

It would obviously not be practically possible (and not sane) to store the location of every atom.

- (A) that is waaaaaay too much data – as in the natural case we need a decompression chain

- (B) an apple is mostly liquid water with atoms zapping around like crazy at room temperature

– well one could shock-cryo-freeze an apple maybe

Synthesis of food:

An obvious approach would be to do data-compression on the locations of the molecules of the shock frozen apple,

similar to what is done in lossy image compression

(or in the future with some more advanced AI based compression means ~ see deep-dream).

Such artificial data-compression would lead to some very weird and unnatural artifacts.

But that should be ok in the case of food.

- As long as nourishment value and smell (type of molecules on smallest scales) are sufficiently close and

- As long as the larger scale texture is sufficiently close.

No one wants a terrifying artificial Frankenstein apple in the uncanny valley of food texture, right?

See: Why identical copying is unnecessary for foodsynthesis
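The trade-off described above (fine detail is lost, bulk composition is kept) can be illustrated with a toy lossy compressor. This is only a sketch and not a proposed food-synthesis method: it assumes a one-dimensional list of concentration samples and uses plain block averaging as a stand-in for real lossy codecs. The function names are hypothetical.

```python
def compress(samples, block):
    """Lossy step: keep only the mean value of each block of samples."""
    means = []
    for start in range(0, len(samples), block):
        chunk = samples[start:start + block]
        means.append(sum(chunk) / len(chunk))
    return means


def decompress(means, block, length):
    """Expand each stored mean back into a flat run of identical samples."""
    out = []
    for mean in means:
        out.extend([mean] * block)
    return out[:length]


if __name__ == "__main__":
    original = [0, 2, 4, 6, 1, 1, 3, 3]   # made-up concentration profile
    stored = compress(original, block=4)   # 8 numbers shrink to 2
    restored = decompress(stored, block=4, length=len(original))
    print(stored, restored, sum(original), sum(restored))
```

The restored profile is blocky (an artifact), yet its total equals that of the original, which mirrors the idea that composition can stay close while the fine structure does not.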

Synthesis of replacement organs:

In the case of living replacement organs, weird compression artifacts (e.g. broken cell membranes or molecules placed at insufficiently accurate positions) would be a showstopper problem.

But that problem is dwarfed by the problem that the mechanosynthesis of glassy shock-frozen water

- sounds exceptionally difficult and may well be impossible (it would require temporarily holding many weak hydrogen bonds at odd, barely stable angles)

- would certainly be astronomically slow, because without convergent assembly the surface area available to build on keeps shrinking relative to the volume
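The shrinking surface area per volume can be illustrated with the simplest possible geometry. The sketch below assumes a solid cube that can only be built on at its outer surface; the cube shape and the edge lengths are illustrative assumptions, not values from the text.

```python
def surface_per_volume_cube(edge_m):
    """Surface area divided by volume for a solid cube, in 1/m (equals 6 / edge)."""
    return 6 * edge_m ** 2 / edge_m ** 3


if __name__ == "__main__":
    # Assumed edge lengths from nanoscale to apple scale.
    for edge in (1e-9, 1e-6, 1e-3, 1e-1):
        print(f"edge {edge:.0e} m -> {surface_per_volume_cube(edge):.1e} per metre")
```

Each tenfold increase in edge length cuts the buildable surface per unit of volume by a factor of ten.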

The proper way (proper decompression chain) to synthesize biological products:

The much much more practical and proper way to go is microscale paste 3D printing of cultivated cells.

Having the DNA of an apple tree as the building plan for an apple does not help in the artificial synthesis of an apple

with a gem-gum-tech-based cell-cultivating food synthesizer that operates more like a microscale paste printer.

Likewise, a program/build plan that is meant for such a food synthesizer would not work as a seed for an apple tree that can be buried and sprouted.

One could however eventually make a system that behaves like a tree and sprouts gemstone based food synthesizers. More as a gag than practical.

Philosophical

Nature's evolutionary process has led to some incredibly high degrees of data compression.

One example is the miraculous fact that the DNA build plan for a whole human being fits into roughly a gigabyte of data (ignoring microbiome and epigenetics).
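A rough size estimate for the human genome can be sketched as follows. It assumes about 3.1 billion base pairs per haploid genome (an approximate figure) and a naive encoding of 2 bits per base with no further compression. The function name is hypothetical.

```python
BITS_PER_BASE = 2  # four possible bases (A, C, G, T) need 2 bits each


def genome_bytes(base_pairs, copies=1):
    """Bytes needed to store a genome at 2 bits per base, without compression."""
    return base_pairs * copies * BITS_PER_BASE // 8


if __name__ == "__main__":
    haploid_base_pairs = 3_100_000_000  # assumed approximate value
    print(genome_bytes(haploid_base_pairs) / 1e9, "GB for one copy")
    print(genome_bytes(haploid_base_pairs, copies=2) / 1e9, "GB for both copies")
```

Under these assumptions one copy needs somewhat under one gigabyte and the two copies present in most body cells need about one and a half.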

High data compression may limit the degree of separation of concerns – maybe?

Warning! you are moving into more speculative areas.

The extremely high levels of data compression in living organisms may have come at the cost of attaining only little separation of concerns over the course of evolution.

The very non-local folding-behavior of proteins may be an example of such lack of separation of concerns.

Albeit in many cases we just might not understand it yet.

But perhaps just enough separation of concerns must have been attained for evolution to be able to adapt sufficiently well to changing environmental conditions?

It is not the case that there is no separation of concerns at all in the DNA of living organisms.

Large contiguous sections sometimes even correspond to whole body parts (as experiments with fruit flies showed).

Artificial vs natural

Regarding separation of concerns:

- The buildup of the natural data-compression-chain (evolution) is top down

- The buildup of the artificial data-compression-chain (3D modelling) is bottom up

System design by natural evolution is pretty brutal:

- initially does not care that it cannot change one parameter without changing half a dozen others too in weird ways (top down)

- The testing is fully offloaded to the end-users and running into a bug can literally be fatal

- DNA "source-code" is very much not designed to be read and understood

- There is no documentation whatsoever

System design by humans:

- starts with simple base cases where all parameters can be adjusted cleanly and separately (bottom up)

- then finds ways to make the code more general – less code covering more cases – can be partly related to loss of separation of concerns

- ideally the special cases are kept around for documentation on how and why the final "abstract nonsense" came to be

Given that human system design processes

- can build up slowly and steadily and

- are not limited by what individual humans can understand,

the complexity of systems created by humans can steadily and stealthily creep up to levels that

at some point become so advanced that they can well be considered lifelike.

Related

- Control hierarchy

- Data compression

- The reverse: Finding a good compression/encoding.

While decompressing is a technique, compressing is an art (a vague analog to how differentiation is a technique and integration an art).

See: the source of new axioms – Warning! you are moving into more speculative areas.

- Why identical copying is unnecessary for foodsynthesis and Synthesis of food

In the case of synthesis of food, the vastly different decompression chains of biological systems and advanced diamondoid nanofactories lead to the situation that nanofactories cannot synthesize exact copies of food down to the placement of every atom. See Food structure irrelevancy gap for a viable alternative.

- constructive corecursion

- Data IO bottleneck

- Compiling to categories (Conal Elliott)

- mergement of GUI-IDE & code-IDE

External Links

Wikipedia

- Solid_modeling, Computer_graphics_(computer_science)

- Constructive_solid_geometry

- Related to volume based modeling: Quadrics (in context of mathematical shapes) (in context of surface differential geometry)

- Related to volume based modeling: Level-set_method Signed_distance_function

- Avoidable in steps after volume based modeling: Triangulation_(geometry), Surface_triangulation