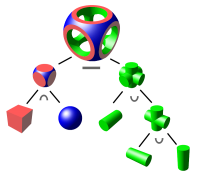

Data decompression chain

The "data decompression chain" is the sequence of expansion steps from

- very compact highest level abstract blueprints of technical systems to

- discrete and simple lowest level instances that are much larger in size.

3D modeling

(TODO: add details)

- high language 1: functional, logical, connection to computer algebra system

- high language 2: imperative, functional

- Volume based modeling with "level set method" or even "signed distance fields" (organized in CSG graphs which reduce to the three operations: sign-flip, sum and maximum)

- Surface based modeling with parametric surfaces (organized in CSG graphs)

- quadric nets C1 (rarely employed as of 2017)

- triangle nets C0

- tool-paths

- Primitive signals: step-signals, rail-switch-states, clutch-states, ...
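The volume based step in the list above can be illustrated with a minimal sketch. It assumes signed distance functions written as plain Python closures (negative inside, positive outside); the helper names `sphere`, `translate`, `flip`, `intersection`, `union`, `difference` and `voxelize` are made up for this illustration and do not come from any particular library. Only sign-flip and maximum are used here, since those two already give all the Boolean CSG operations; the "sum" operation mentioned above is left out. The final sampling step shows the decompression: a one-line model expands into tens of thousands of inside/outside samples.

```python
import math

def sphere(radius):
    """Signed distance to a sphere centred at the origin (negative inside)."""
    return lambda x, y, z: math.sqrt(x * x + y * y + z * z) - radius

def translate(sdf, dx, dy, dz):
    """Move a shape by evaluating it at the back-shifted point."""
    return lambda x, y, z: sdf(x - dx, y - dy, z - dz)

def flip(sdf):
    """Sign-flip: swaps inside and outside (complement)."""
    return lambda x, y, z: -sdf(x, y, z)

def intersection(a, b):
    """Maximum: a point is inside only if it is inside both shapes."""
    return lambda x, y, z: max(a(x, y, z), b(x, y, z))

def union(a, b):
    """De Morgan: A or B == not (not A and not B)."""
    return flip(intersection(flip(a), flip(b)))

def difference(a, b):
    """A minus B == A and (not B)."""
    return intersection(a, flip(b))

def voxelize(sdf, half_extent, n):
    """Sample the model on an n*n*n grid; True means 'inside'."""
    step = 2.0 * half_extent / (n - 1)
    coords = [-half_extent + i * step for i in range(n)]
    return [sdf(x, y, z) <= 0.0 for x in coords for y in coords for z in coords]

# Compact high-level description: a unit sphere with a bite taken out of it.
model = difference(sphere(1.0), translate(sphere(0.5), 1.0, 0.0, 0.0))

# Expanded low-level description: 32**3 discrete samples.
voxels = voxelize(model, 1.5, 32)
```

Note that after a maximum the result is in general only a bound on the true distance, not an exact distance field, but its sign still classifies inside versus outside correctly, which is all the sampling step needs.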

Targets

- physical object

- virtual simulation

Maybe useful for compiling the same code to different targets (as present in this context): Compiling to categories (Conal Elliott)

3D modeling & functional programming

Modeling of static 3D models is purely declarative.

- example: OpenSCAD

...

Similar situations in today's computer architectures

- high level language ->

- compiler infrastructure (e.g. llvm) ->

- assembler language ->

- actual actions of the target data processing machine

Bootstrapping of the decompression chain

One of the concerns regarding the feasibility of advanced productive nanosystems is the worry that all the necessary data cannot be fed to

- the mechanosynthesis cores and

- the crystolecule assembly robotics

Incidentally, the former are mostly hard-coded and do not need much data.

For example, the size comparison in E. Drexler's TEDx talk (2015) at 13:35 can (if taken too literally) lead to the misjudgment that there is a fundamentally insurmountable data bottleneck.

Of course, trying to feed yottabits per second over those few pins would be ridiculous and impossible, but that is not what is planned.

(wiki-TODO: move this topic to Data IO bottleneck)

We already know how to avoid such a bottleneck.

Although we program computers with our fingers, delivering just a few bits per second, computers now process petabits per second internally.

The goal is reachable by gradually building up a hierarchy of decompression steps.

The lowest-level, highest-volume data is generated internally and locally, very near to where it is finally "consumed".
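The idea of a hierarchy of decompression steps can be sketched as nested generators. This is only an illustration under made-up assumptions: the blueprint format, the name `diamond_unit`, the function names and the figure of 8 step signals per unit cell are all hypothetical and not taken from any actual nanofactory design. Each level expands one item from the level above into many items, and the generators produce the low-level data lazily, so it only exists at the point where it is consumed.

```python
from itertools import product

def expand_part(blueprint):
    """Level 1: compact blueprint -> one placement per repeated unit cell."""
    nx, ny, nz = blueprint["repeat"]
    for i, j, k in product(range(nx), range(ny), range(nz)):
        yield (blueprint["cell"], i, j, k)

def expand_cell(placement, steps_per_cell):
    """Level 2: one cell placement -> primitive step signals (hypothetical)."""
    _cell, i, j, k = placement
    for s in range(steps_per_cell):
        yield (i, j, k, s)

def count_signals(blueprint, steps_per_cell):
    """Consume the whole chain without ever storing the low-level data."""
    return sum(
        1
        for placement in expand_part(blueprint)
        for _signal in expand_cell(placement, steps_per_cell)
    )

# A few dozen bytes of high-level input ...
blueprint = {"cell": "diamond_unit", "repeat": (20, 20, 20)}

# ... expand into 20 * 20 * 20 * 8 = 64000 low-level signals.
n = count_signals(blueprint, steps_per_cell=8)
```

Adding another level (for example, parts repeated within an assembly) multiplies the output volume again while the input grows by only a few bytes, which is why the external data rate does not need to match the internal one.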

Related

- Control hierarchy

- mergement of GUI-IDE & code-IDE

- The reverse: while decompressing is a technique, compressing is an art (a vague analog to differentiation and integration). See: the source of new axioms (Warning! You are moving into more speculative areas.)

- Why identical copying is unnecessary for foodsynthesis and Synthesis of food: In the case of synthesis of food, the vastly different decompression chains of biological systems and advanced diamondoid nanofactories mean that nanofactories cannot synthesize exact copies of food down to the placement of every atom. See Food structure irrelevancy gap for a viable alternative.

- constructive corecursion

- Data IO bottleneck

- Compiling to categories (Conal Elliott)

External Links

Wikipedia

- Solid_modeling, Computer_graphics_(computer_science)

- Constructive_solid_geometry

- Related to volume based modeling: Quadrics (in context of mathematical shapes) (in context of surface differential geometry)

- Related to volume based modeling: Level-set_method, Signed_distance_function

- Avoidable in steps after volume based modeling: Triangulation_(geometry), Surface_triangulation