Assembly layer


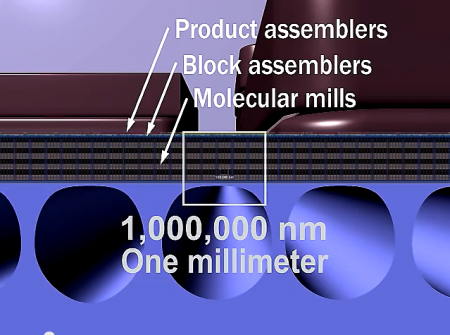

| + | [[File:productive-nanosystems-video-snapshot.png|thumb|450px|Cross section through a nanofactory showing the lower assembly levels vertically stacked on top of each other. Image from the official "[[Productive Nanosystems From molecules to superproducts|productive nanosystems]]" video. '''The most part of the stack are the bottom layers. [[Convergent assembly]] happens very thin at the top.''']] | ||

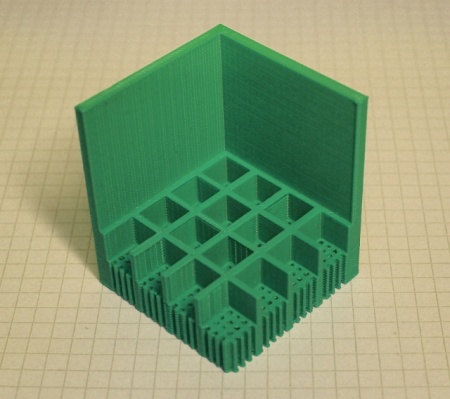

| − | The layers in a [[nanofactory]] are the [[assembly levels]] mapped to the [[assembly layers]] interspersed by [[routing layers]]. | + | [[File:Bottom_layers_and_convergent_assembly_layers.JPG|thumb|450px|This is an '''extremely simplified''' model of the layer structure of a AP small scale factory. '''The stack of bottom layers (fine tubes in the image) is reduced in height by two to three orders of magnitude!''' The size steps above will likely be bigger than x4 in a practical system. Size steps of x32 allow for easy reasoning since they are nicely visualizable and two steps (32^2) make about a round 1000fold size increase.]] |


Latest revision as of 10:45, 11 February 2024

The layers in a stratified nanofactory are the assembly levels mapped onto assembly layers, interspersed with lockout routing layers and other layers. Note that here "levels" refers to the abstract order, while "layers" refers to the physical, parallel stacked sheets.


Layers as natural choice

Scaling laws say that (assuming scale-invariant operation speeds) when halving the size of some generalized assembly unit, one can place four such units below it. Each of those is twice as fast and produces per operation one eighth of the amount of product the upper unit produces. Multiplying these factors (4 × 2 × 1/8 = 1) shows that the top layer and the layer of half-size units below it have exactly the same throughput. This works not just with halving the size but with any subdivision.

All layers in an arbitrarily deep stack (with equivalent step sizes) of cube shaped units have equal throughput.
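The scaling argument can be checked with a few lines of arithmetic. The following Python sketch assumes exactly the model stated above (cube-shaped units, constant operation speed, one uniform size step k per layer) and uses exact fractions so that rounding does not blur the equality. The function name is a hypothetical illustration, not an established tool.

```python
from fractions import Fraction


def layer_throughputs(k: int, depth: int) -> list[Fraction]:
    """Relative throughput of each layer in a stack of cube-shaped units, top layer first.

    Assumed model (the scaling argument above, generalized from k = 2):
    - each step down shrinks the unit side length by the factor k,
    - so k**2 units fit below each unit,
    - at constant operation speed each unit runs k times more cycles per second,
    - and each cycle yields a product with k**3 less volume.
    """
    result = []
    for level in range(depth):
        units = Fraction(k) ** (2 * level)                 # units per top-layer footprint
        frequency = Fraction(k) ** level                    # relative cycles per second
        product_per_cycle = Fraction(1, k ** (3 * level))  # relative product volume per cycle
        result.append(units * frequency * product_per_cycle)
    return result


# Halving (k = 2) and a 32-fold step (k = 32) both give equal throughput per layer.
print([str(t) for t in layer_throughputs(2, 4)])   # ['1', '1', '1', '1']
print([str(t) for t in layer_throughputs(32, 3)])  # ['1', '1', '1']
```

The factors k**2, k and 1/k**3 cancel for every k, which is why the result does not depend on the step size chosen.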

Related: Higher throughput of smaller machinery

The upper convergent assembly layers in particular behave very much scale-invariantly. At the bottommost assembly layers, the lower physical size limit becomes relevant: manipulators cannot be as small as, or smaller than, moieties. This, together with the fact that one needs to slow down slightly from m/s to cm/s or mm/s speeds to prevent excessive waste heat, distorts this scale invariance somewhat. At the bottom of the stack, at the smallest scales, stacks of identically sized sub-layers through which finished DMEs are passed are sensible.

Optimizing operation speeds across assembly layers

If, instead of keeping operation speeds constant across the convergent assembly layers (which is far from optimal), one wants to keep the power dissipation per volume constant across the convergent assembly layers, then the operation speeds must be raised when going up the convergent assembly layers to larger scales.

(Note: speeds, not frequencies! Precise use of language is needed here.)

Let's first try to identify the underused potential if speeds are kept constant at the values of the bottommost assembly layers, assuming bottommost speeds in the mm/s range for low losses.

(Falsely) assuming constant operation speeds across the convergent assembly layers

When going up the assembly layers, the total internal bearing surface per volume falls quickly. This is due to the well-known scaling law that smaller things (including machinery) have a higher surface area per volume. So going up the convergent assembly layers to bigger sizes would make the losses per volume fall linearly (in proportion to one over the unit size).

Perhaps surprisingly, increasing the bearing surface area in the larger-scale assembly layers drops losses further. (See also: Increasing bearing area to decrease friction.) That is: if the bearing surface per volume is instead kept constant by making the bigger bearings multi-shelled, as described on the page infinitesimal bearings, then the operation speed is distributed over the bearing sub-layers, so each sub-layer slides more slowly. More sub-layers make friction rise linearly, but the drop in sub-layer speed (operation speeds are still assumed constant across assembly layers!) makes the dynamic friction losses drop quadratically. Combining the two effects (bearing sub-area up, bearing sub-speed down), the friction losses drop linearly. This comes on top of the linear drop from before.

The two effects (scaling law and infinitesimal bearing trick) combined give a quadratic drop in dynamic friction losses when going up the assembly layers. This would leave massive potential unused. Instead, we want to raise operation speeds when going up the convergent assembly layers.
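The two loss trends and the resulting room for higher speeds can be sketched numerically. This Python sketch is only a restatement of the qualitative model in the text, with these assumptions made explicit: bearing area per volume scales as shells/scale, sliding speed per shell as speed/shells, and dynamic friction power per bearing area as the square of the sliding speed. All values are relative to the bottommost layer (scale = 1, speed = 1), and the function names are hypothetical.

```python
import math


def dissipation_per_volume(scale: float, speed: float = 1.0, shells: float = 1.0) -> float:
    """Relative dynamic-friction power per volume, normalized to 1 for the bottom layer.

    Assumed model (from the reasoning above, not a validated physics model):
    - bearing area per volume ~ shells / scale   (smaller machines have more surface)
    - sliding speed per shell ~ speed / shells   (multi-shell "infinitesimal" bearings)
    - dynamic friction power per area ~ (sliding speed) ** 2
    """
    area_per_volume = shells / scale
    sliding_speed = speed / shells
    return area_per_volume * sliding_speed ** 2


def speed_for_equal_dissipation(scale: float, shells: float = 1.0) -> float:
    """Operation speed (relative to the bottom layer) that keeps dissipation per volume at 1."""
    # Solve speed**2 / (shells * scale) == 1 for speed.
    return math.sqrt(shells * scale)


for scale in (1, 32, 1024):  # two x32 steps up the stack
    single = dissipation_per_volume(scale)               # falls like 1/scale
    multi = dissipation_per_volume(scale, shells=scale)  # falls like 1/scale**2
    print(scale, single, multi,
          speed_for_equal_dissipation(scale),                # grows like sqrt(scale)
          speed_for_equal_dissipation(scale, shells=scale))  # grows like scale
```

Setting shells equal to scale keeps the bearing surface per volume at the bottom-layer value, which is the multi-shell case described above. Under these assumptions, holding dissipation per volume constant would allow operation speeds to grow with the square root of the scale (single-shell bearings) or linearly with it (multi-shell bearings).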

Higher speeds for larger scale convergent assembly layers

The total volume of the larger-scale assembly layers is smaller than the total volume of the bottommost assembly layers due to factory-style optimizations in convergent assembly. Thus part of the increase in operation speeds is natural and mandatory anyway. Going beyond that …

When using higher speeds at the higher convergent assembly levels, one can either accept being able to use those speeds only for recycling pre-produced parts (in the extreme of large scales: just swapping the front and back wheels of a car), or one needs to change the nanofactory to a more fractal design with increasing branching at the bottom end of the convergent assembly stack. See the more general concept: Assembly levels.

Going to the extreme, at some point speeds become limited by acceleration forces (a spinning thin-walled tube ring made from nanotubes ruptures at around 3 km/s, independent of scale). Much sooner, mechanical resonances and probably some other problems (acceleration and braking losses?) will occur. Related: Unsupported rotating ring speed limit
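Why the ring limit is independent of scale follows from the standard thin-ring result: the hoop stress of a thin ring spinning about its axis is sigma = rho * v^2, where v = omega * r is the rim speed, so the radius cancels out. The Python sketch below shows this. The density and strength numbers are placeholders for illustration, not material data, and the sketch does not attempt to reproduce the ~3 km/s figure quoted above; plug in your own material values.

```python
import math


def hoop_stress(density: float, angular_velocity: float, radius: float) -> float:
    """Hoop stress in Pa of a thin ring spinning about its axis: sigma = rho * (omega * r)**2."""
    rim_speed = angular_velocity * radius  # m/s
    return density * rim_speed ** 2


def max_rim_speed(tensile_strength: float, density: float) -> float:
    """Rim speed in m/s at which hoop stress reaches the tensile strength.

    The ring radius cancels out, which is why the limit is independent of scale.
    """
    return math.sqrt(tensile_strength / density)


# Same rim speed (100 m/s) at a 100 nm radius and at a 1 m radius gives the same stress.
density = 1000.0  # kg/m^3, placeholder value, not material data
print(hoop_stress(density, 1e9, 1e-7), hoop_stress(density, 100.0, 1.0))
```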

Slowdown through stepsize

Increasing the size of a step between layers slows down throughput due to the shrinking number of manipulators per surface area. In the extreme case, one has a single scanning probe microscope for a whole mole of particles. There it would take far longer than the age of the universe to assemble anything the size of a human hand. This, by the way, is why the massive parallelism gained through bootstrapping methods for productive nanosystems is an absolute necessity.
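The single-probe extreme is plain arithmetic: placement count divided by placement rate. In this Python sketch the placement rate of 1000 particles per second and the manipulator count of 10^12 are hypothetical values chosen for illustration, not measured figures for any real instrument. The age of the universe is taken as roughly 13.8 billion years.

```python
AVOGADRO = 6.02214076e23               # particles per mole (exact SI definition)
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year
AGE_OF_UNIVERSE_YEARS = 13.8e9         # approximate


def assembly_years(particles: float, placements_per_second: float, manipulators: int = 1) -> float:
    """Years needed to place `particles` one by one with `manipulators` working in parallel."""
    return particles / (placements_per_second * manipulators) / SECONDS_PER_YEAR


# Hypothetical, generous rate: one probe placing 1000 particles per second.
serial = assembly_years(AVOGADRO, placements_per_second=1000)
print(f"{serial:.1e} years, {serial / AGE_OF_UNIVERSE_YEARS:.0f}x the age of the universe")

# Same rate, but with 10**12 manipulators in parallel (hypothetical count).
print(f"{assembly_years(AVOGADRO, 1000, manipulators=10**12) * 365.25:.0f} days")
```

Even at this optimistic rate, a single probe needs on the order of 10^13 years for one mole. Spreading the same work over many parallel manipulators is the only lever that brings the time down to practical values.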

Increased step sizes bring the benefit of fewer design restrictions in the products (fewer borders). The slowdown incurred by bigger step sizes can, within bounds, be compensated with parallelism in parts assembly. To avoid a bottleneck, all step sizes in the stack of assembly layers should be similar.

See main page: Branching factor

Also: Math of convergent assembly

Consequence of lack of layers

Using a tree structure instead of a stack means that halving the size leads to more than four subunits, and the upper convergent assembly layers can potentially become a bottleneck.
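The bottleneck can be illustrated by extending the stack arithmetic from above with a branching count. This Python sketch assumes that each unit is fed by `children` units that are k times smaller, that each smaller unit is k times faster and yields k**3 less product per cycle, and that a chain of assembly layers can deliver no more than its slowest layer. The model and the names are hypothetical illustrations, not a design method from the source.

```python
def tree_layer_throughputs(k: int, children: int, depth: int) -> list[float]:
    """Relative maximum throughput of each layer, top layer first.

    Hypothetical model: every unit is fed by `children` units that are k times smaller.
    As in the stack argument, each smaller unit is k times faster and makes k**3 less
    product per cycle, so each step down multiplies capacity by children / k**2.
    A plain stack of cubes is the special case children == k**2.
    """
    step_factor = children / k**2
    return [step_factor ** level for level in range(depth)]


def chain_output(capacities: list[float]) -> float:
    """In a serial chain of assembly layers the slowest layer sets the output rate."""
    return min(capacities)


stack = tree_layer_throughputs(k=2, children=4, depth=4)  # [1.0, 1.0, 1.0, 1.0]
tree = tree_layer_throughputs(k=2, children=8, depth=4)   # [1.0, 2.0, 4.0, 8.0]
print(chain_output(stack), chain_output(tree))            # 1.0 1.0
```

In the tree, the lower layers could deliver up to eight times more, but the output stays at the top layer's rate, so that extra capacity sits idle behind the upper layers.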

Since (when assuming constant operating speeds across all assembly layers) every layer has the same productivity (mass per time), the very thin bottommost layer has the same productivity as the (practically or hypothetically implemented) uppermost convergent assembly layer: a single cube with the side length of the factory. This makes the density of productivity (productivity per volume) explode, but there are issues.

See: Higher throughput of smaller machinery
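How strongly the productivity density explodes follows directly from the equal-throughput statement: the same throughput P comes from a sheet of volume L^2 * h at the bottom and from a cube of volume L^3 at the top, so the density ratio is L / h. This Python sketch assumes that both layers share the factory's L x L footprint. The 10 cm side length and 1 micrometre bottom layer thickness in the example are hypothetical numbers.

```python
def productivity_density_ratio(factory_side: float, bottom_layer_thickness: float) -> float:
    """Productivity per volume of the bottommost layer relative to the topmost layer.

    Assumes (as in the text) that both layers have the same throughput P:
    - top layer: one cube of side L, volume L**3
    - bottom layer: a sheet with the same L x L footprint and thickness h, volume L**2 * h
    Ratio = (P / (L**2 * h)) / (P / L**3) = L / h
    """
    top_volume = factory_side ** 3
    bottom_volume = factory_side ** 2 * bottom_layer_thickness
    return top_volume / bottom_volume


# Hypothetical numbers: a 10 cm factory whose bottommost layer is 1 micrometre thick.
print(f"{productivity_density_ratio(0.1, 1e-6):.0e}")
```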

Maximizing productivity

For all but the most extreme applications, a stratified design should work well. Going beyond that, it becomes tedious. As an analogy, one can compare it to going from very useful PCs to more specialised graphics cards.

Filling the whole factory volume with the immense productivity density of the bottommost layer(s) of a stratified design would lead to unmanageable requirements for product expulsion (too high accelerations) and ridiculously high waste heat, which even advanced heat transportation could probably handle only for very short peaks. (Todo: check when it becomes possible.) See: productivity explosion.

Sensible working designs for continuous maximum performance cannot fill a whole cube volume with an implementation of the bottommost assembly levels; they need a complicated and inflexible 3D fractal design. If one goes to the limits, the cooling facilities will become far bigger than the factory.

Deviating from the stack structure to get more volume than the bottommost layer of a stratified design (where actual mechanosynthesis happens):

- makes system design considerably harder (less scale invariance, harder adjustment of the system after design)

- may lead to a bottleneck at the upper convergent assembly levels.

Compact monolithic diamondoid molecular assemblers

In the early (and now outdated) "universal diamondoid molecular assembler" concept, space is also filled completely with production machinery. But in this concept, there is a much lower volumetric density of locations where mechanosynthesis takes place compared to gem-gum factories. That is, molecular assemblers would feature few, big, and slow mechanosynthesis cores due to their general-purpose applicability requirement. So for the molecular assembler concept, there might not be a bottleneck problem despite the productive devices filling the whole volume.

The actual problems with molecular assemblers are:

- Limited space for integrating a second assembly level beyond the first one. Here it cannot be organized as layers. And …

- If assembly levels are "simplified", then product assembly design gets severely complicated due to the lack of intermediate standard part handling capabilities.

- The molecular assembler crystal scaffold obstructs product growth, and it needs logic for mobility and coordinated motion whose complexity lies at or even above what would be needed for microcomponent maintenance microbots.

Delineation to microcomponent maintenance microbots

- In the diamondoid molecular assembler concept, the assemblers are supposed to be capable of self-replication given just molecular feedstock.

- Microcomponent maintenance microbots are usually incapable of self-replication. And if they are capable of it, they need their crystolecules supplied as vitamins.

In the diamondoid molecular assembler concept, the assemblers are usually assumed to perform assembly at the first assembly level, and perhaps at the second assembly level at best. Assembly should happen in their internal building chamber. In the past, it was usually assumed that there is just a single internal building chamber. Assembly might also happen outside, in a volume that is somehow very well sealed off against inundation by air.

Microcomponent maintenance microbots would usually not feature any mechanosynthetic capabilities on the first assembly level. They would rather only feature assembly capabilities at the second and third assembly levels.

Large scale

What about building whole houses, skyscrapers, cities, or even giant space stations?

At large scales, mass becomes increasingly relevant and may begin to pose a top-level bottleneck. But this is far off. An unplanned way of working is likely to emerge when time is not critical. This would look like many nanofactories being operated at different locations at the same time in a semi-manual style. This is a fractal style of manufacturing at the macro scale, with very low throughput compared to what would be possible with even a single simple stratified nanofactory.