Why ultra-compact molecular assemblers are too inefficient

Why too inefficient as a far term ideal target

For a discussion of near-term systems, see further below.

Too low reaction density in spacetime

Mechanochemistry and mechanosynthesis have one disadvantage compared to liquid phase chemistry (a disadvantage that can be overcompensated): reaction sites are far apart because of the volume of machinery needed per reaction site. Reaction site density drops with the cube of the linear size of the machinery per reaction site. Speeding up to compensate is not an option, as friction grows quadratically with speed. This might be another reason why critics assumed that GHz frequencies (which were never proposed) would be necessary for practical throughput and would lead to far too much waste heat.
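The compensation argument above can be made concrete with a small back-of-the-envelope sketch. It assumes (these are simplifications not spelled out in the text) one reaction site per cube of machinery, frictional power per site proportional to the square of operating speed with an unchanged coefficient, and arbitrary units throughout. The function name and the returned keys are hypothetical.

```python
def compensation_cost(size_factor: float) -> dict:
    """Cost of making up for bulkier machinery purely by running faster.

    size_factor: how many times larger (in linear size) the machinery per
    reaction site is, relative to some reference design.
    Assumptions (illustrative only): one reaction site per machinery cube,
    frictional power per site ~ speed**2, arbitrary units.
    """
    # Sites per unit volume fall with the cube of the linear machinery size.
    density_drop = size_factor ** 3
    # Each remaining site must run this much faster for equal throughput per volume.
    speedup_needed = density_drop
    # Friction power per site grows quadratically with speed.
    power_per_site = speedup_needed ** 2
    # Fewer sites per volume, but each one dissipates far more.
    heat_per_volume = power_per_site / density_drop
    # Energy dissipated per reaction (power divided by reaction rate).
    heat_per_operation = power_per_site / speedup_needed
    return {
        "density_drop": density_drop,
        "speedup_needed": speedup_needed,
        "power_per_site": power_per_site,
        "heat_per_volume": heat_per_volume,
        "heat_per_operation": heat_per_operation,
    }


if __name__ == "__main__":
    for k in (1.0, 2.0, 5.0):
        print(k, compensation_cost(k))
```

Under these assumptions, machinery that is twice as large per reaction site would need eight times the speed and would dissipate eight times the heat per operation, which illustrates why speeding up is not a way out.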

To get to a higher spatial reaction site density one eventually needs to specialize the mechanosynthesis and hard-code the operations. This leads to factory-style systems with production lines for specialized parts.

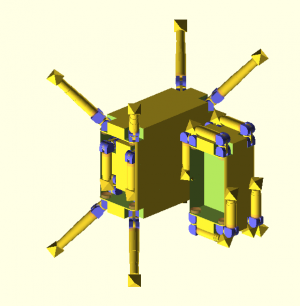

Specialized assembly lines pay off big time, and they are not necessarily restrictive in part types. More specialized assembly lines just make a minimal complete nanofactory pixel a bit bigger. There will still be a few software-controllable general purpose mechanosynthesis units, akin to just the manipulator part of molecular assemblers, but only rather few of them compared to the specialized ones. There is no need for one "replication backpack" per manipulator.

As an interesting side note: streaming kinds of motion are an option, but since inertia plays little role at the proposed 5 mm/s and diamond stiffness, smooth motion along curves is by far not as critical as in macroscale factories. Smoother trajectories may still be preferable; this is a thing to investigate.

Related: There is limited room at the bottom, Atom placement frequency, Bottom scale assembly lines in gem-gum factories, Molecular mill

Replication backpack overhead (BIG ISSUE)

Every assembler needs a "replication backpack". That is: for each general purpose mechanosynthesis manipulator stage (plus stick-n-place stage) the entire machinery for self-replication needs to be added. This makes the already big volume of the non-specialized manipulator blow up by a further significant factor and makes the reaction site density drop to abysmally low levels. The result is an extremely slow system, even when pushed to limits where it runs hot and wasteful.

Mobility subsystem

In the case of mobile molecular assemblers (most proposals), the replication backpack also includes a mobility system for every single mechanosynthesis manipulator stage, adding further bloat.

For an example of non-mobile molecular assemblers (featuring all the issues discussed here), see Chris Phoenix's early "nanofactory" analysis. Links here: Discussion of proposed nanofactory designs

That design is not really a nanofactory, as the specialization, optimization, and open modularity of a factory are all absent.

Level throughput balancing mismatch

If the proposal even suggests avoiding the likely hellishly difficult direct in-place mechanosynthesis, then (due to the ultra-compactness requirement that holds by definition) there is space left for just a single stick-n-place crystolecule manipulator. This manipulator is massively starved. Most of the time it needs to wait around for the next crystolecule to be finished.

See related page: level throughput balancing

Putting a few stick-n-place crystolecule manipulators outside of molecular assemblers breaks the typical monolithicity assumption of molecular assemblers. So this first improvement already moves away from the molecular assembler concept.
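The starvation of a lone stick-n-place manipulator can be illustrated with a minimal sketch. All names and all numbers below are hypothetical placeholders chosen only to make the arithmetic visible; they are not design figures from any proposal. The sketch assumes every mechanosynthesis stage places atoms at a fixed rate and that a crystolecule is finished after a fixed number of atom placements.

```python
import math


def parts_supply_rate(n_synthesis_stages: int,
                      atom_placement_rate: float,
                      atoms_per_crystolecule: float) -> float:
    """Crystolecules finished per second by all mechanosynthesis stages together."""
    return n_synthesis_stages * atom_placement_rate / atoms_per_crystolecule


def stick_n_place_utilization(n_synthesis_stages: int,
                              atom_placement_rate: float,
                              atoms_per_crystolecule: float,
                              part_placement_rate: float) -> float:
    """Fraction of time one stick-n-place manipulator is busy (capped at 1.0)."""
    supply = parts_supply_rate(n_synthesis_stages, atom_placement_rate,
                               atoms_per_crystolecule)
    return min(1.0, supply / part_placement_rate)


def balanced_stage_ratio(atom_placement_rate: float,
                         atoms_per_crystolecule: float,
                         part_placement_rate: float) -> int:
    """Mechanosynthesis stages needed to keep one stick-n-place manipulator fed."""
    return math.ceil(part_placement_rate * atoms_per_crystolecule
                     / atom_placement_rate)


if __name__ == "__main__":
    # Placeholder values (assumptions, not taken from the text):
    ATOM_RATE = 1e6        # atom placements per second per stage
    ATOMS_PER_PART = 1000  # atom placements per finished crystolecule
    PART_RATE = 1e4        # crystolecules one manipulator could place per second

    print(stick_n_place_utilization(1, ATOM_RATE, ATOMS_PER_PART, PART_RATE))
    print(balanced_stage_ratio(ATOM_RATE, ATOMS_PER_PART, PART_RATE))
```

With these placeholder values, a monolithic assembler with one mechanosynthesis stage keeps its stick-n-place manipulator busy only a tenth of the time, while a balanced design would pair each manipulator with about ten mechanosynthesis stages.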

Inferior convergent assembly

Related: One misses out on all the benefits that convergent assembly has to offer. Outside of molecular assemblers one could in principle emulate convergent assembly using some complex swarm behavior. But that likely compares unfavorably to actual convergent assembly in both system complexity and system efficiency. (See: fractal growth speedup limit)

General-purpose-only consequences

Abusive underuse of general purpose capability

In a productive nanosystem of larger scale there will be a need for incredibly large numbers of exactly identical standard parts. Using a general purpose assembler for cranking out gazillions of exactly identical standard parts makes no use of the general purpose capability at all.

Unnecessarily long travel distance for molecule fragments

A general purpose mechanosynthesis manipulator is necessarily significantly bigger than a highly optimized special purpose station hard-coded for a specific mechanosynthetic operation, so molecule fragments have to travel farther per operation. By the way: hard-coding only means a lack of live reprogrammability during runtime. In a good design, disassembly and reassembly in a nanofactory can reprogram that hard-coding.

Consequences of insufficient space for specialization on specific part types

As hypothetical advanced systems:

A huge loss due to the enormous quantities of crystolecules in macroscopic structures. Exactly identical standard parts will be needed in these quantities.

As hypothetical early systems:

Due to the overly big size of the mechanosynthesis stages, stemming from their general purpose nature, these stages are significantly slower than specialized systems. Yes, indeed, faster specialized systems would not be sensible in early direct path systems. But the slower speed means that for halfway reasonable level throughput balancing the number ratio of mechanosynthesis stages to stick-n-place stages becomes quite big. This makes it even less reasonable to put a stick-n-place stage into a monolithic molecular assembler. This stage would be extremely underused, spending most of its time waiting around idly.

Analogy with electronic chip design having large specialized subsystems for efficiency

Molecular assemblers are like computronium in the sense of maximal small-scale homogeneity. Closest to computronium come PLAs (programmable logic arrays), which can implement any logical function and can recurse to make finite state machines. In practice there are FPGAs, which have a little bit of specialization beyond a plain monolithic PLA. FPGAs are possible and useful in niche applications.

The inefficiency overhead in the case of assemblers over nanofactories is likely much bigger though.

Mismatch to physical scaling laws

The scaling law of higher throughput of smaller machinery tells us that a well designed system can have very high throughput densities, that is, product volume per time per machinery volume. This makes a thin-layer chip-like design a natural choice.
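The scaling law can be sketched numerically under a simple assumption that is not spelled out in the text: a machine of linear size L moving at operating speed v completes one cycle in roughly L/v and handles a fixed fraction of its own volume per cycle. Throughput density (product volume per time per machinery volume) then scales as v/L. The function and parameter names are hypothetical, and the 5 mm/s speed is the proposed operating speed mentioned earlier on this page.

```python
def throughput_density(machine_size_m: float,
                       operating_speed_m_per_s: float,
                       volume_fraction_per_cycle: float = 1.0) -> float:
    """Product volume per second per machinery volume (unit: 1/s).

    Assumes one cycle takes machine_size / operating_speed and processes
    volume_fraction_per_cycle times the machine's own volume.
    """
    cycle_time_s = machine_size_m / operating_speed_m_per_s
    return volume_fraction_per_cycle / cycle_time_s


if __name__ == "__main__":
    SPEED = 5e-3  # 5 mm/s
    for size_m in (1.0, 1e-3, 1e-6, 1e-7):
        print(f"size {size_m:g} m -> {throughput_density(size_m, SPEED):g} per second")
```

At equal operating speed, shrinking the machinery by a factor of a thousand raises throughput density by the same factor in this model, which is why a thin layer of small machinery can already deliver large product flows.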

For an intuitive image, imagine a smartphone screen, but instead of RGB pixels emulating colors there are much smaller nano- and micro-robotics putting a dozen or so types of gemstones together, emulating soft squishy materials or whatever else (gemstone based metamaterials).

Filling a whole volume with this kind of productivity would be pointless, as the ten tons or so per liter per second produced just can't be removed fast enough and would likely be impossible to cool even with the means of diamondoid technology.

Molecular assemblers, on the other hand, would fill the entire volume and still be slower than a nanofactory chip.

One gets the hassle of eventually needing to separate the products from the assemblers or vice versa.

One gets the fractal growth speedup limit.

Why too inefficient as a near term bootstrapping pathway

Note that this is in the direct path context.

Similar things to the above hold, with a few caveats.

Efficiency is obviously less important in early systems. In early systems the difficulty of building these systems is a bigger factor.

See: Why ultra-compact molecular assemblers are too difficult

Still, if inefficiencies make speeds drop by a giant amount, as with the replication backpack overhead, it's a problem.

Obviously full-blown hyperspecialized assembly lines are totally not an option in early systems BUT …

there is no need …

- … to have one replication backpack per mechanosynthetic manipulator stage

- … to have a closed system (adjacent systems can interleave)

- … for an expanding vacuum shell (see: Vacuum lockout)

- … to stubbornly attempt to force self-replicativity into a package of predefined size given by one's non-replicative preproduction limits using a single macroscale SPM needle-tip.

Instead systems can be granted the size they actually want to have to become self-replicative.

When going for such a more sensible early nanosystem pixel (direct path), what one gets …

- is an early, rather inefficient, quite compact but not ultra-compact self-replicative pixel

- is most definitely no longer a bunch of "molecular assemblers tacked to a surface".

There just are no monolithic self-replicative nanobots here.

See: Why nanofactories are not molecular assemblers tacked to a surface.

Nonlinear increase in inefficiency and building difficulty:

For self-replicating systems, their inefficiency and their "self-replication for the sake of self-replication" factor grow nonlinearly with system compactness. It's worse for system construction difficulty: there the nonlinearity is hyperbolic with a singularity. Below a certain size it's fundamentally impossible.

With the size pre-defined (a cube with 100 nm side length is often suggested) and the insistence that full monolithic self-replicativity must fit within it, the difficulty might be stratospheric, especially with early primitive means.
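The "hyperbolic with a singularity" behavior can be pictured with a toy model. This is only an illustration of the shape of the curve: the functional form, the function name, and in particular the minimum feasible size are assumptions. The actual minimum size is unknown, and the 100 nm value used below is a placeholder, not a claim.

```python
import math


def construction_difficulty(size_nm: float,
                            min_size_nm: float,
                            scale: float = 1.0) -> float:
    """Toy hyperbolic difficulty model (arbitrary units).

    Difficulty diverges as the allowed package size approaches the
    (unknown, here assumed) minimum size at which full monolithic
    self-replication can still fit. At or below that size the task is
    treated as impossible and infinity is returned.
    """
    if size_nm <= min_size_nm:
        return math.inf
    return scale * min_size_nm / (size_nm - min_size_nm)


if __name__ == "__main__":
    MIN_SIZE_NM = 100.0  # placeholder assumption only
    for size_nm in (1000.0, 200.0, 110.0, 101.0, 100.0):
        print(size_nm, construction_difficulty(size_nm, MIN_SIZE_NM))
```

In such a model, a system that is granted a few times the minimum size is only moderately difficult, while a package forced close to the minimum becomes arbitrarily hard. That is the reason for letting systems take the size they need instead of fixing it in advance.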

Delineation

A thing that looks superficially similar is Microcomponent maintenance microbots.

Most of the things discussed here do not apply there.

While these are mobile and manipulating, they are at a larger scale and not self-replicative.

They are not even self-replicative when crystolecules are allowed as a vitamin dependence.

Related

- Up: Molecular assembler

- Proto-assembler

- Molecular assemblers as advanced productive nanosystem (outdated)

- Why ultra-compact molecular assemblers are too difficult

- Why ultra-compact molecular assemblers are not desirable

- Why nanofactories are not molecular assemblers tacked to a surface

- Early nanosystem pixel (direct path)

- Direct path
